Why we should thank Karen Spärck Jones for search engines

The pioneering work of a computer scientist from Yorkshire means I can find a pizza takeaway whenever I want

With each update that Google rolls out, the search algorithm becomes more and more sophisticated. It’s easy to forget how impressive it is that a computer can understand what you type into that search bar and serve up a relevant piece of content.

Modern search engines owe much of that ability to the pioneering research of Karen Spärck Jones. Without her, I wouldn’t be able to search for “pizza open now” and get an exhaustive list of takeaway restaurants in less than a second.

Search engines need to know what a webpage is about

Lots of factors affect how Google judges the quality of a piece of content, and where it positions your site on the results page. But to begin with, the search engine needs to be able to understand what your content is about. That way it can file it under the right terms, and later match it to a search query.

Think of it like a library. You have to know more or less what a book is about to put it in the right section so people can find it easily.

Human readers can skim a text and get the main gist of what it’s about. But it’s not that simple for a computer.

Rare words (usually) = meaningful words

Search engines figure out the main topic of a text by looking at how often it uses words in comparison to the average text. The logic behind this system is that the more common a word is, the less important it is. By looking at the rarest words a text uses, the search engine can identify the most important words in a text, and thus understand what the text is about.

Let’s look at an example. Say I was writing a post about my most recent obsession, Cuban salsa.

I’ll probably use some common words like “a” and “the” a lot. I’ll also use some more specific, rarer terms like “dancing”, “salsa”, and “Cuban salsa”. The search engine will rank the words in my text in order of importance, based on the rule that the rarer a word is, the more important it is.

“a”, “the”, “and” – common, not important

“Dancing”, “salsa” – fairly rare, pretty important

“Cuban salsa” – even rarer, most important

Karen Spärck Jones’ term frequency model

But rarity isn’t the only thing that makes a word important to the meaning of a text. Frequency (how often it’s used) also matters.

Karen Spärck Jones was the computer scientist who created the statistical method search engines use to figure out which words are important. Her model calculates the rarity of a term and combines it with how often the term appears in a text. It’s got a pretty catchy name: “Term Frequency-Inverse Document Frequency” – or tf-idf for short.

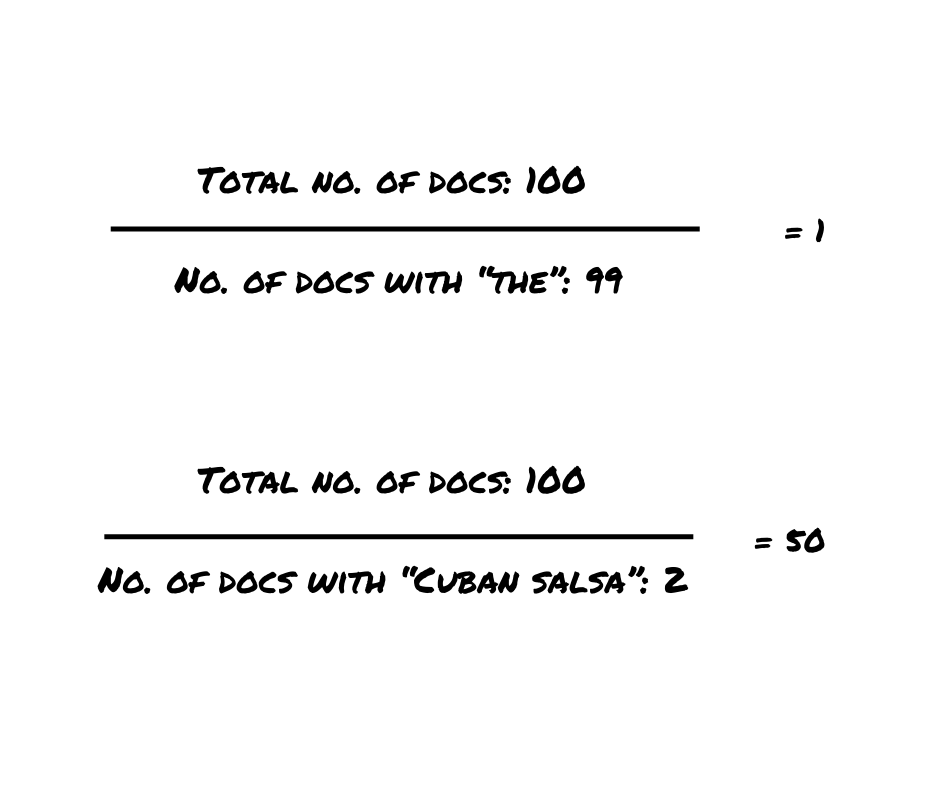

‘Inverse document frequency’ looks at how many pages across a whole collection contain a term. You get an idf score by dividing the total number of documents in a set (in this case that set is all the webpages on the internet) by the number of documents that contain the term you’re investigating. In practice, the logarithm of that ratio is used, which keeps the scores for very rare words from ballooning. Rare words get higher scores.
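To make the idf calculation concrete, here is a minimal Python sketch. It assumes a made-up set of three tiny “pages” rather than the whole internet, and the function name `inverse_document_frequency` is my own invention for illustration. It takes the logarithm of the ratio, which is the usual convention.

```python
import math

def inverse_document_frequency(term, documents):
    """Return log(N / n): N documents in total, n of them containing the term."""
    containing = sum(1 for doc in documents if term in doc.lower().split())
    if containing == 0:
        raise ValueError(f"{term!r} does not appear in any document")
    return math.log(len(documents) / containing)

# Hypothetical mini "internet" of three pages (illustrative data only)
documents = [
    "the salsa class starts at eight",
    "the pizza place is open now",
    "the bus to the station is late",
]

print(inverse_document_frequency("the", documents))    # on every page, so 0.0
print(inverse_document_frequency("salsa", documents))  # on one page of three, so log(3), about 1.10
```

A word that turns up on every page scores zero, while a word found on only one page scores highest, which is the “rare words get higher scores” rule in action.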

The ‘Term frequency’ part identifies how often a term shows up in a piece of text. If a word is used a lot in a text, it’s probably important to the topic of that text.

For instance, my article might mention ‘Havana’ once or twice. While ‘Havana’ is a rare (therefore important) term, it’s not used anywhere near as much as ‘Cuban salsa’, so we know ‘Havana’ isn’t the main topic of the text.

Based on Karen Spärck Jones’ formulas, search engines combine the tf and idf scores for a text to find the most important terms. Because ‘Cuban salsa’ is a term with a really high idf score and a pretty high tf in my article, my post will get a high tf-idf score for that term. The search engine will understand that the topic of my text is ‘Cuban salsa’.
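Here is a rough sketch of how the two scores combine, written in Python. Everything in it is an assumption for illustration: the four sample texts, the `tf_idf_scores` name, and the simple choice of tf as “count divided by text length” and idf as the logarithm of “total documents divided by documents containing the word”. Real search engines use far more elaborate variants, and this toy version only looks at single words.

```python
import math
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it on whitespace (a deliberately naive tokenizer)."""
    return text.lower().split()

def tf_idf_scores(target, corpus):
    """Score each word in `target` by tf * idf.

    Assumes `target` is itself one of the documents in `corpus`,
    so every word appears in at least one document.
    """
    docs = [tokenize(doc) for doc in corpus]
    words = tokenize(target)
    scores = {}
    for word, count in Counter(words).items():
        tf = count / len(words)                    # how often the word is used in this text
        n = sum(1 for doc in docs if word in doc)  # how many documents contain the word
        idf = math.log(len(docs) / n)              # rarer across the corpus means higher
        scores[word] = tf * idf
    return scores

# Hypothetical mini corpus (illustrative data only)
my_post = "salsa in havana is the salsa i love the most"
corpus = [
    my_post,
    "the pizza place is open now",
    "the bus to the station is late",
    "i love the new library",
]

scores = tf_idf_scores(my_post, corpus)
for word, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{word:8s} {score:.3f}")
```

In this toy corpus “salsa” comes out on top because it is both rare and repeated, “havana” scores lower because it is rare but used only once, and “the” scores zero because it appears in every document.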

The tf-idf scores allow Google to file my article in the right place in the Google index (the massive database where Google stores The. Entire. Internet). Later, when someone searches for ‘Cuban salsa’, it’ll retrieve my piece and compare it against all the other relevant articles. If Google considers my piece to be the ‘most helpful’ article for a specific searcher, I’ll rank top.

A pioneer of Natural Language Processing

But Spärck Jones’ work didn’t start with tf-idf. Her earlier research, in the 1960s, focused on the problem of how to program computers to understand human language. Given that so many words have multiple meanings depending on the context, overcoming this problem was no easy feat.

Spärck Jones suggested that one way to get computers to understand natural language is to group related words together. For instance, the word ‘pass’ can have several meanings. If it’s surrounded by terms like ‘exam’, ‘mark’, and ‘grade’, it means ‘to succeed’, whereas if it’s in a paragraph with ‘players’, ‘ball’, and ‘goal’, it means ‘to change possession’.
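The ‘pass’ example can be turned into a toy Python sketch. To be clear, this is not Spärck Jones’ actual method, just a simple illustration of the idea that the surrounding words hint at the meaning. The word groups and the `guess_sense` function are made up for this post.

```python
# Hypothetical groups of related words, one group per meaning of "pass"
SENSE_CLUSTERS = {
    "succeed": {"exam", "mark", "grade"},
    "change possession": {"players", "ball", "goal"},
}

def guess_sense(sentence, clusters=SENSE_CLUSTERS):
    """Pick the meaning whose related words overlap most with the sentence."""
    words = set(sentence.lower().split())
    overlaps = {sense: len(words & related) for sense, related in clusters.items()}
    best = max(overlaps, key=overlaps.get)
    # If no related word appears at all, admit we cannot tell
    return best if overlaps[best] > 0 else None

print(guess_sense("she needs a good mark to pass the exam"))  # succeed
print(guess_sense("the players pass the ball towards goal"))  # change possession
```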

Her work laid much of the foundation for the natural language processing (NLP) technology that we use today. It’s the technology that tools like Grammarly, Google Translate, and Siri need to function.

So, Spärck Jones didn’t just enable our 1 am pizza orders. We should also thank the Yorkshire-born computer scientist for the fact that we can effortlessly fix spelling, order a coffee in another language, and get voice-activated weather updates.